Background

I’m currently working on Volume II of “The Art of Mac Malware” (TAOMM). This 2nd book is a comprehensive resource that covers the programmatic detection of macOS malware via behavior-based heuristics. In other words, it’s all about writing tools to detect malware.

There are many ways to detect malware, and a multi-faceted approach is of course recommended. However, one good place to start is to enumerate all running processes and, for each, examine the process’ main binary for anomalies or characteristics that may reveal if it is malware.

There are a myriad of things to look at when attempting to classify a process’ binary as benign or malicious. Programmatic methods are covered in detail in the soon-to-be-published TAOMM Volume II, but a few include: code signing information, dependencies, exports/imports, and whether the binary is packed or encrypted. And while there are dedicated APIs to extract the code signing information of a binary, if you’re interested in almost anything else, you’ll have to parse the binary yourself.

In this blog post, we’ll show that one of the foundational APIs related to parsing such binaries is fundamentally broken (even on the latest version of macOS, 14.3.1). And while this bug isn’t exploitable, per se, it still has security implications, especially in the context of detecting malware! 👀

Parsing Binaries

Parsing “Apple” binaries is a rather in-depth and nuanced topic. So much so that there is a whole chapter dedicated exclusively to it in both Volume I and the upcoming Volume II. Here though, a high-level discussion will suffice.

The native binary format used by all Apple devices is the venerable Mach-O. If you’re interested in programmatically analyzing binaries that run on macOS (for example, to extract dependencies or to see if they’re packed), you’ll have to leverage a Mach-O parser.

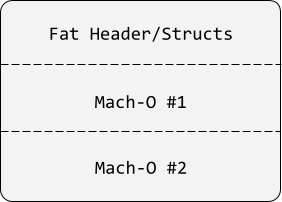

However, Mach-Os are often distributed via universal (or “fat”, in Apple parlance) binaries. Such universal binaries are really just containers for multiple architecture-specific (but, generally speaking, logically equivalent) Mach-O binaries. In Apple parlance, these embedded Mach-Os are known as slices.

The clear benefit is that a single file can be distributed to users and will natively run on any number of architectures. This works because at runtime, for example when a user launches a (universal) binary, the loader will parse the universal binary and automatically select the most compatible Mach-O to run.

Using macOS’s file command, you can examine a binary to see if it’s fat and, if so, which architecture-specific Mach-Os it contains. For example, you can see that LuLu is a universal binary containing two embedded Mach-Os: one for 64-bit Intel (x86_64) systems and another for Apple Silicon (arm64):

```
% file /Applications/LuLu.app/Contents/MacOS/LuLu
LuLu: Mach-O universal binary with 2 architectures: [x86_64:Mach-O 64-bit executable x86_64] [arm64:Mach-O 64-bit executable arm64]
LuLu (for architecture x86_64): Mach-O 64-bit executable x86_64
LuLu (for architecture arm64):  Mach-O 64-bit executable arm64
```
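To get a feel for what file is reporting, here is a minimal C sketch that walks the fat header of a file that has already been read into memory. It assumes the classic 32-bit layout (FAT_MAGIC, 0xcafebabe; the FAT_MAGIC_64 variant is not handled) and redefines that constant so it builds without Apple’s headers. The slice_info and list_fat_slices names are hypothetical. Because fat headers are always stored big-endian on disk, each field is assembled byte by byte rather than by casting to struct fat_arch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Value from <mach-o/fat.h>, redefined so this sketch builds anywhere. */
#define SK_FAT_MAGIC 0xcafebabeu  /* 32-bit fat header, stored big-endian */

/* One entry per embedded Mach-O, mirroring the fields of struct fat_arch. */
struct slice_info {
    uint32_t cputype;
    uint32_t cpusubtype;
    uint32_t offset;
    uint32_t size;
    uint32_t align;
};

/* Fat headers are big-endian regardless of host, so read byte by byte. */
static uint32_t read_be32(const uint8_t *p) {
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Fills `out` with up to `max` slices. Returns the slice count from the
   header, or -1 if `buf` is not a (32-bit) universal binary or is truncated. */
long list_fat_slices(const uint8_t *buf, size_t len,
                     struct slice_info *out, size_t max) {
    assert(buf != NULL && (out != NULL || max == 0));
    if (len < 8 || read_be32(buf) != SK_FAT_MAGIC)
        return -1;

    uint32_t nfat_arch = read_be32(buf + 4);
    for (uint32_t i = 0; i < nfat_arch && i < max; i++) {
        size_t at = 8 + (size_t)i * 20;  /* each fat_arch is five 32-bit fields */
        if (at + 20 > len)
            return -1;                   /* header claims more entries than exist */
        out[i].cputype    = read_be32(buf + at);
        out[i].cpusubtype = read_be32(buf + at + 4);
        out[i].offset     = read_be32(buf + at + 8);
        out[i].size       = read_be32(buf + at + 12);
        out[i].align      = read_be32(buf + at + 16);
    }
    return (long)nfat_arch;
}
```

For a binary like LuLu, this would report two entries whose CPU types are 0x01000007 (x86_64) and 0x0100000c (arm64).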

Circling back to the programmatic detection of malware, once we have a list of running processes we’ll want to closely examine each process’ binary. However, if the item was distributed as a fat binary, then although the on-disk image will be a fat/universal binary, what’s actually running is just the most compatible Mach-O slice. This slice is what we’re interested in …as generally speaking there isn’t much point in examining any of the other embedded Mach-Os. This means not only will we have to first parse the universal binary, but we’ll also have to identify the slice that the loader decided was the most compatible to run on the system that we’re scanning.

Though you might think this is trivial (“on Intel systems it’ll be the Intel slice, while on Apple Silicon it will be the arm slice”), it is actually a bit more complicated. There can be any number of slices, with varying degrees of compatibility even for the same architecture, plus emulation cases where an Intel slice can actually run on an Apple Silicon system thanks to Rosetta.

This notion of a “best” slice makes sense, as a universal binary can have multiple slices that are, to varying degrees, compatible and thus could all run on a given system. However, the system should select the “best” or most compatible one (which all comes down to the slice whose CPU type and sub type most closely match the host system’s CPU).

Luckily, Apple has provided APIs that can help us find the relevant slice …and once we have that in hand, we can happily scan it!

Volume I of “The Art of Mac Malware” (that focuses on analysis) contains a chapter on both fat (universal) and Mach-O binaries.

You can read this (and all other chapters of Vol I) online for free:

Finding the Best Slice

Efficiency for security tools is paramount. As such, when we encounter a running process that is backed by a universal binary containing multiple slices, we’re interested solely in the slice that is actually running.

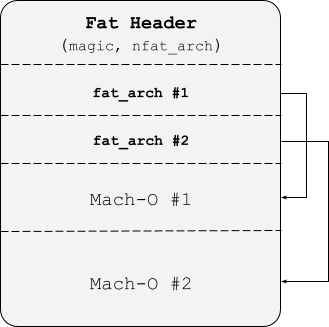

Traditionally one would use the NXFindBestFatArch API, which, as its name implies, returns the best slice. Behind the scenes this API parses the universal binary’s header and each fat_arch structure that describes the embedded Mach-Os (slices).

Here’s the definition of the fat_arch structure, which is found in Apple’s mach-o/fat.h:

```c
struct fat_arch {
    int32_t  cputype;    /* cpu specifier (int) */
    int32_t  cpusubtype; /* machine specifier (int) */
    uint32_t offset;     /* file offset to this object file */
    uint32_t size;       /* size of this object file */
    uint32_t align;      /* alignment as a power of 2 */
};
```

As shown in the above image, there is one fat_arch structure for each embedded Mach-O (slice). The fat_arch structure describes the CPU type and sub type that the slice is compatible with as well as the offset of the slice in the universal (fat) file.

Lots more on CPU types and sub types soon, but generally speaking, the CPU type is the primary architecture or family of the CPU, whereas the sub type is the variant. For example, for CPUs whose type is ARM, you’ll encounter variants such as ARMv7 and ARMv7s (used in older mobile devices), and ARM64e (now used by Apple in their latest Apple Silicon devices).
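To make the type/sub type split concrete, here is a small, hypothetical lookup table in C covering four common pairs. The numeric values are those from <mach/machine.h> (CPU_TYPE_X86_64 is 0x01000007 and CPU_TYPE_ARM64 is 0x0100000c, each being a base CPU type OR’d with the CPU_ARCH_ABI64 flag), redefined locally so the sketch is self-contained; arch_name and arch_lookup are illustrative names, not Apple APIs. Note the CPU_SUBTYPE_MASK step: the high byte of a sub type carries capability flags (arm64e slices, for example, carry a sub type of 0x80000002), so it is stripped before comparing.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Constants as defined in <mach/machine.h>, redefined so this builds anywhere. */
#define SK_CPU_ARCH_ABI64   0x01000000u  /* "64-bit ABI" flag OR'd into the type */
#define SK_CPU_TYPE_X86_64  (7u | SK_CPU_ARCH_ABI64)
#define SK_CPU_TYPE_ARM64   (12u | SK_CPU_ARCH_ABI64)
#define SK_CPU_SUBTYPE_MASK 0xff000000u  /* capability bits live in the high byte */

/* CPU type = family, sub type = variant within that family. */
static const struct { const char *name; uint32_t type, subtype; } arch_table[] = {
    { "x86_64",  SK_CPU_TYPE_X86_64, 3 },  /* CPU_SUBTYPE_X86_64_ALL */
    { "x86_64h", SK_CPU_TYPE_X86_64, 8 },  /* CPU_SUBTYPE_X86_64_H (Haswell) */
    { "arm64",   SK_CPU_TYPE_ARM64,  0 },  /* CPU_SUBTYPE_ARM64_ALL */
    { "arm64e",  SK_CPU_TYPE_ARM64,  2 },  /* CPU_SUBTYPE_ARM64E */
};
#define ARCH_COUNT (sizeof arch_table / sizeof arch_table[0])

/* Maps a (type, sub type) pair to a name, ignoring capability bits. */
const char *arch_name(uint32_t cputype, uint32_t cpusubtype) {
    uint32_t sub = cpusubtype & ~SK_CPU_SUBTYPE_MASK;
    for (size_t i = 0; i < ARCH_COUNT; i++)
        if (arch_table[i].type == cputype && arch_table[i].subtype == sub)
            return arch_table[i].name;
    return "unknown";
}

/* Reverse lookup: returns 1 and fills the outputs if `name` is in the table. */
int arch_lookup(const char *name, uint32_t *cputype, uint32_t *cpusubtype) {
    assert(name != NULL && cputype != NULL && cpusubtype != NULL);
    for (size_t i = 0; i < ARCH_COUNT; i++)
        if (strcmp(arch_table[i].name, name) == 0) {
            *cputype = arch_table[i].type;
            *cpusubtype = arch_table[i].subtype;
            return 1;
        }
    return 0;
}
```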

Let’s now look at a snippet of code that, given a pointer to a universal (fat) header, uses the NXFindBestFatArch API to find and return the best slice:

```c
#include <stdio.h>
#include <mach-o/arch.h>
#include <mach-o/fat.h>
#include <mach-o/swap.h>

struct fat_arch* parseFat(struct fat_header* header)
{
    //first fat_arch
    struct fat_arch* currentArch = NULL;

    //local architecture
    const NXArchInfo *localArch = NULL;

    //best matching slice
    struct fat_arch *bestSlice = NULL;

    //get local architecture
    localArch = NXGetLocalArchInfo();

    //swap?
    // fat headers are big-endian on disk, so on little-endian hosts the magic reads as FAT_CIGAM
    if(FAT_CIGAM == header->magic)
    {
        //swap fat header
        swap_fat_header(header, localArch->byteorder);

        //swap (all) fat arch
        swap_fat_arch((struct fat_arch*)((unsigned char*)header
            + sizeof(struct fat_header)), header->nfat_arch, localArch->byteorder);
    }

    printf("Fat header\n");
    printf("fat_magic: %#x\n", header->magic);
    printf("nfat_arch: %x\n", header->nfat_arch);

    //first arch, starts right after fat_header
    currentArch = (struct fat_arch*)((unsigned char*)header + sizeof(struct fat_header));

    //get best slice
    // pass a pointer to the first fat_arch, plus the number of fat_arch structures
    bestSlice = NXFindBestFatArch(localArch->cputype,
        localArch->cpusubtype, currentArch, header->nfat_arch);

    return bestSlice;
}
```

In a nutshell, this code first gets the current system’s local architecture via the NXGetLocalArchInfo API. Then (after accounting for any differences in endianness), it invokes the aforementioned NXFindBestFatArch API. You can see we pass it the CPU type and sub type of the system’s local architecture, a pointer to the first fat_arch, and the total number of fat_arch structures.

The NXFindBestFatArch API will iterate over all slices and return a pointer to the fat_arch that describes the most compatible embedded Mach-O. In other words, the one the loader will select and execute on the system when the universal binary is run. Recalling that each fat_arch structure contains the offset of the slice it describes, we have all the information needed to go off and scan the selected Mach-O slice:

```objc
//read in binary
// (mutable, as parseFat() byte-swaps the fat headers in place)
NSMutableData* data = [NSMutableData dataWithContentsOfFile:<path to some file>];

//typecast
struct fat_header* fatHeader = (struct fat_header*)data.mutableBytes;

//find best architecture/slice
struct fat_arch* bestArch = parseFat(fatHeader);

//only continue if a compatible slice was found
if(NULL != bestArch)
{
    //init pointer to best slice
    struct mach_header_64* machoHeader = (struct mach_header_64*)((uint8_t*)data.mutableBytes + bestArch->offset);

    //go scan the Mach-O
}
```

…all is well and good, right?
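Before moving on, one thing the snippet above skips is checking that the slice bestArch describes actually fits inside the file before turning its offset into a pointer. Since a security tool may well be handed a deliberately malformed file, here is a minimal C sketch of such a check. slice_is_sane is a hypothetical helper, and the specific checks (at least the 28 bytes of a 32-bit mach_header, bounds computed in 64 bits so a huge offset can’t wrap around, offset aligned to 2^align) are one reasonable set, not a description of what the loader itself enforces.

```c
#include <assert.h>
#include <stdint.h>

/* Returns 1 if the slice described by a (host-order) fat_arch entry lies
   fully inside a file of `file_len` bytes and honors its declared alignment. */
int slice_is_sane(uint32_t offset, uint32_t size, uint32_t align,
                  uint64_t file_len) {
    if (size < 28)                              /* smaller than any mach_header */
        return 0;
    if (align > 31)                             /* align is a power-of-2 exponent */
        return 0;
    if ((uint64_t)offset + size > file_len)     /* 64-bit math: no wraparound */
        return 0;
    if (offset % (UINT32_C(1) << align) != 0)   /* offset must be 2^align aligned */
        return 0;
    return 1;
}
```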

The New macho_* APIs

In recent versions of macOS Apple decided to deprecate the NXFindBestFatArch API:

```c
extern struct fat_arch *NXFindBestFatArch(cpu_type_t cputype,
                                          cpu_subtype_t cpusubtype,
                                          struct fat_arch *fat_archs,
                                          uint32_t nfat_archs) __CCTOOLS_DEPRECATED_MSG("use macho_best_slice()");
```

As we can see in the function declaration, they now recommend using macho_best_slice, which is declared in mach-o/utils.h. Here you’ll also find other new Mach-O APIs such as macho_for_each_slice and macho_arch_name_for_mach_header.

Unfortunately there isn’t much info on the macho_best_slice API:

…so let’s explore this API more!

At first blush macho_best_slice appears to be a big improvement over NXFindBestFatArch, as it abstracts away a lot of steps and low-level details, such as having to load the file into memory yourself, dealing with the endianness of the headers, and looking up the current system’s architecture in the first place.

So now in theory you should just be able to call:

```objc
macho_best_slice(<path to some file>, ^(const struct mach_header* _Nonnull slice, uint64_t sliceFileOffset, size_t sliceSize) {

    printf("best architecture\n");
    printf("offset: %llu (%#llx)\n", sliceFileOffset, sliceFileOffset);
    printf("size: %zu (%#zx)\n", sliceSize, sliceSize);

});
```

Ah, one function call to get a pointer to the Mach-O header, file offset, and size of the best slice? Amazing.

Ok, well I hate to rain on the parade, but macho_best_slice is broken for everybody except Apple 🤬

What’s wrong with macho_best_slice?

Following Apple’s directives, I updated my code, swapping out the now deprecated NXFindBestFatArch API for the shiny new macho_best_slice.

Apparently I test my code more than Apple (which, let’s not forget, is one of the most valuable companies in the world) …and I immediately noticed a perplexing issue.

Many of my calls to macho_best_slice would return with an error …even though I was 100% sure the path I specified was a universal binary containing a compatible (runnable) Mach-O. 🤔

First, here’s the code I’m using to replicate the issue:

```objc
#import <mach-o/utils.h>
#import <Foundation/Foundation.h>

int main(int argc, const char * argv[]) {

    //expect a path to a binary
    if(argc < 2)
    {
        printf("usage: %s <path to binary>\n", argv[0]);
        return -1;
    }

    int result = macho_best_slice(argv[1],
        ^(const struct mach_header* _Nonnull slice, uint64_t sliceFileOffset, size_t sliceSize) {

        printf("Best architecture\n");
        printf(" Name: %s\n\n", macho_arch_name_for_mach_header(slice));
        printf(" Size: %zu (%#zx)\n", sliceSize, sliceSize);
        printf(" Offset: %llu (%#llx)\n", sliceFileOffset, sliceFileOffset);

    });

    if(0 != result)
    {
        printf("ERROR: macho_best_slice failed with %d/%#x\n", result, result);
    }

    return 0;
}
```

…it’s super simple, ya? Basically we just call the macho_best_slice API with a user-specified file, and for universal binaries that contain a compatible Mach-O slice, the callback block should be invoked.

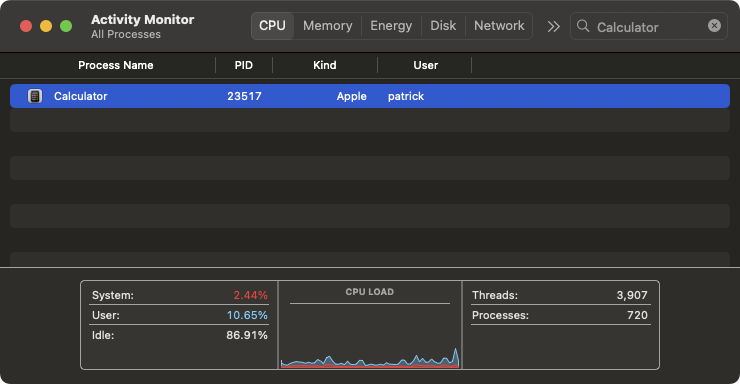

Let’s compile and run it to show that it does work …sometimes (largely to rule out that I’m doing something totally wrong). We’ll use macOS’s Calculator app, which is a universal binary containing Intel and Arm slices:

```
% file /System/Applications/Calculator.app/Contents/MacOS/Calculator
Calculator: Mach-O universal binary with 2 architectures: [x86_64:Mach-O 64-bit executable x86_64] [arm64e:Mach-O 64-bit executable arm64e]
Calculator (for architecture x86_64): Mach-O 64-bit executable x86_64
Calculator (for architecture arm64e): Mach-O 64-bit executable arm64e
```

My MacBook has an Apple Silicon chip, thus runs a version of macOS compiled for arm64:

```
% sw_vers
ProductName:    macOS
ProductVersion: 14.3.1
BuildVersion:   23D60

% uname -a
Darwin Patricks-MacBook-Pro.local 23.3.0 Darwin Kernel Version 23.3.0: Wed Dec 20 21:31:00 PST 2023; root:xnu-10002.81.5~7/RELEASE_ARM64_T6020 arm64
```

…which means when Calculator is run, the loader should select and execute the arm64e slice. Looking at Activity Monitor you can see the ‘Kind’ column is set to “Apple”, meaning yes, the Apple Silicon slice was indeed, as expected, selected and running.

Also, macho_best_slice correctly locates and returns this (arm64e) slice to us:

```
% ./getBestSlice /System/Applications/Calculator.app/Contents/MacOS/Calculator
Best architecture:
 Name: arm64e
 Size: 262304 (0x400a0)
 Offset: 278528 (0x44000)
```

However, if we (re)run the code on LuLu it fails:

```
% ./getBestSlice LuLu.app/Contents/MacOS/LuLu
ERROR: macho_best_slice failed with 86/0x56
```

The error (86, or 0x56) maps to EBADARCH. According to Apple, this means “bad CPU type in executable”: although the specified path exists and is a Mach-O or fat binary, none of its slices are loadable.

…this is (very) strange as I personally compiled LuLu with native arm64 support, and it does run quite happily on my and everybody else’s Apple Silicon systems!

So what’s the issue? We’ll get to it shortly, but first I noticed that macho_best_slice did function as expected when passed any Apple binary …but always failed with EBADARCH for any 3rd-party binary. So what’s the difference? As you might have noticed in the file output for Calculator, all Apple binaries have an architecture type of arm64e. On the other hand, 3rd-party binaries will just be plain old arm64. As we’ll see, this subtle difference ultimately triggers the bug in macho_best_slice.

Reversing macho_best_slice

Though the implementation of macho_best_slice is open-source (you can view it here), when getting to the bottom of a bug I like to follow along in a disassembler and debugger.

In a debugger, we can set a breakpoint on macho_best_slice. Then, (re)executing my simple code, we see that once the breakpoint is hit, we’re in the function within libdyld:

```
% lldb getBestSlice LuLu.app/Contents/MacOS/LuLu
...
(lldb) settings set -- target.run-args "LuLu.app/Contents/MacOS/LuLu"
(lldb) b macho_best_slice
Breakpoint 1: where = libdyld.dylib`macho_best_slice, address = 0x00000001804643fc
(lldb) r
Process 23832 launched: 'getBestSlice' (arm64)
Process 23832 stopped
* thread #1, queue = 'com.apple.main-thread', stop reason = breakpoint 1.1
    frame #0: 0x0000000189dfc3fc libdyld.dylib`macho_best_slice
libdyld.dylib`macho_best_slice:
->  0x189dfc3fc <+0>: pacibsp
```

This library’s path has traditionally been /usr/lib/system/libdyld.dylib, though now it’s found in the dyld cache.

Loading up libdyld.dylib and looking at macho_best_slice’s decompilation, we can see it just opens the specified file and then invokes _macho_best_slice_in_fd:

```c
int _macho_best_slice(int arg0, int arg1) {
    ...
    r0 = open(arg0, 0x0);
    if (r0 != -0x1) {
        _macho_best_slice_in_fd(r0, r19);
        close(r0);
    ...
    return r0;
}
```

…this, as expected, matches its source code:

```cpp
int macho_best_slice(const char* path, void (^bestSlice)(const struct mach_header* slice, uint64_t sliceFileOffset, size_t sliceSize)__MACHO_NOESCAPE)
{
    int fd = ::open(path, O_RDONLY, 0);
    if ( fd == -1 )
        return errno;

    int result = macho_best_slice_in_fd(fd, bestSlice);
    ::close(fd);

    return result;
}
```

The _macho_best_slice_in_fd function invokes various dyld3 functions before invoking macho_best_slice_fd_internal:

```cpp
int macho_best_slice_in_fd(int fd, void (^bestSlice)(const struct mach_header* slice, uint64_t sliceFileOffset, size_t sliceSize)__MACHO_NOESCAPE)
{
    const Platform     platform    = MachOFile::currentPlatform();
    const GradedArchs* launchArchs = &GradedArchs::forCurrentOS(false, false);
    const GradedArchs* dylibArchs  = &GradedArchs::forCurrentOS(false, false);
    ...

    return macho_best_slice_fd_internal(fd, platform, *launchArchs, *dylibArchs, false, bestSlice);
}
```

As dyld is open source, we can take a look at dyld functions such as GradedArchs::forCurrentOS:

```cpp
const GradedArchs& GradedArchs::forCurrentOS(bool keysOff, bool osBinariesOnly)
{
#if __arm64e__
    if ( osBinariesOnly )
        return (keysOff ? arm64e_keysoff_pb : arm64e_pb);
    else
        return (keysOff ? arm64e_keysoff : arm64e);
#elif __ARM64_ARCH_8_32__
    return arm64_32;
#elif __arm64__
    return arm64;
#elif __ARM_ARCH_7K__
    return armv7k;
#elif __ARM_ARCH_7S__
    return armv7s;
#elif __ARM_ARCH_7A__
    return armv7;
#elif __x86_64__
    return isHaswell() ? x86_64h : x86_64;
#elif __i386__
    return i386;
#else
    #error unknown platform
#endif
}
```

As we’re executing on an arm64e system and GradedArchs::forCurrentOS is invoked with false for both its arguments, this method will return arm64e. We can confirm this in a debugger by printing out the return value (found in the x0 register):

```
% lldb getBestSlice LuLu.app/Contents/MacOS/LuLu
...
* thread #1, queue = 'com.apple.main-thread', stop reason = breakpoint 1.1
    frame #0: 0x0000000189df3240 libdyld.dylib`dyld3::GradedArchs::forCurrentOS(bool, bool)
libdyld.dylib`dyld3::GradedArchs::forCurrentOS:
->  0x189df3240 <+0>: adrp   x8, 13
(lldb) finish
Process 27157 stopped
* thread #1, queue = 'com.apple.main-thread', stop reason = step out
    frame #0: 0x0000000189dfc490 libdyld.dylib`macho_best_slice_in_fd + 48
libdyld.dylib`macho_best_slice_in_fd:
->  0x189dfc490 <+48>: mov    x22, x0
(lldb) reg read $x0
      x0 = 0x0000000189e00a88 libdyld.dylib`dyld3::GradedArchs::arm64e
```

As we can see, this is a variable of type const GradedArchs. Looking at the source code, we can see it has been set via GradedArchs::arm64e = GradedArchs({GRADE_arm64e, 1}), where GRADE_arm64e is #defined as CPU_TYPE_ARM64, CPU_SUBTYPE_ARM64E, false.

Back to the macho_best_slice_fd_internal function, which contains the core logic to identify the best slice. It’s a long function that you can view in its entirety here. However, in the context of tracking down this bug, much of it is not relevant …but let’s still walk through the relevant parts.

After mapping in the file it’s examining, it calls the dyld3::FatFile::isFatFile function to make sure it really is looking at a universal file. You can take a look at this open-source function if you’re interested. Not too surprisingly, it simply checks for the fat magic values FAT_MAGIC and FAT_MAGIC_64.

As the binary we passed, LuLu, is a universal binary, the isFatFile method, as expected, returns a non-zero value (the address where the universal binary has been mapped into memory):

```
(lldb) x/i $pc
    0x189dfc558: bl     0x189df2e20 ; dyld3::FatFile::isFatFile(void const*)
->  0x189dfc55c  cbz    x0, 0x189dfc65c
Target 0: (getBestSlice) stopped.
(lldb) reg read $x0
      x0 = 0x0000000100010000
```

Moving on, it then makes use of the dyld3::FatFile::forEachSlice function to iterate over each embedded slice. This function takes a callback block to invoke for each slice. The block passed by macho_best_slice_fd_internal to dyld3::FatFile::forEachSlice performs the following for each slice:

- Invokes dyld3::MachOFile::isMachO
- Invokes dyld3::GradedArchs::grade

```cpp
ff->forEachSlice(diag, statbuf.st_size, ^(uint32_t sliceCpuType, uint32_t sliceCpuSubType, const void* sliceStart, uint64_t sliceSize, bool& stop) {
    if ( const MachOFile* mf = MachOFile::isMachO(sliceStart) ) {
        if ( mf->filetype == MH_EXECUTE ) {
            int sliceGrade = launchArchs.grade(mf->cputype, mf->cpusubtype, isOSBinary);
            if ( (sliceGrade > bestGrade) && launchableOnCurrentPlatform(mf) ) {
                sliceOffset = (char*)sliceStart - (char*)mappedFile;
                sliceLen    = sliceSize;
                bestGrade   = sliceGrade;
            }
        }
    ...
```

As these functions are part of dyld, they are both open-source. The isMachO method performs a few basic sanity checks, such as looking for Mach-O magic values, in order to ascertain that the current slice really is a Mach-O. The grade function is more interesting, and we’ll dive into it shortly, but basically it checks if the specified CPU type and sub type (for example, of a Mach-O slice) are compatible with the current system.

If none of the slices pass the isMachO method and “grade” check, macho_best_slice_fd_internal sets a return variable to EBADARCH (0x56), as shown in the following disassembly:

```
0x189dfc61c cbz    w8, loc_189dfc700
...

0x189dfc700 mov    w21, #0x56      ;EBADARCH
...

0x189dfc748 mov    x0, x21
...
0x189dfc764 retab
```

We can also see this in the source:

```cpp
if ( bestGrade != 0 ) {
    if ( bestSlice )
        bestSlice((MachOFile*)((char*)mappedFile + sliceOffset), (size_t)sliceOffset, (size_t)sliceLen);
}
else
    result = EBADARCH;
```

This is eventually propagated back to macho_best_slice, which then returns it to the caller. This explains where the error code that our program printed out is coming from. But we still don’t know why.
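The bookkeeping above boils down to “keep the slice with the highest non-zero grade, else fail with EBADARCH”. Here is a C sketch of just that selection logic. The grade_fn callback type, pick_best_slice, and grade_arm64e_only are hypothetical names; the grader is a stand-in for a table holding only CPU_TYPE_ARM64 / CPU_SUBTYPE_ARM64E, and the MH_EXECUTE and launchableOnCurrentPlatform checks are deliberately omitted. EBADARCH is defined locally as 86, its value on macOS.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SK_EBADARCH 86  /* value of EBADARCH in Darwin's <sys/errno.h> */

struct slice { uint32_t cputype, cpusubtype; uint64_t offset, size; };

/* Grader callback: 0 means incompatible, higher means a better match. */
typedef int (*grade_fn)(uint32_t cputype, uint32_t cpusubtype);

/* Keeps the highest-graded slice; reports EBADARCH if none grades above 0.
   `*best_index` is only written when a better slice is found. */
int pick_best_slice(const struct slice *slices, size_t n, grade_fn grade,
                    size_t *best_index) {
    assert(grade != NULL && best_index != NULL);
    int best_grade = 0;
    for (size_t i = 0; i < n; i++) {
        int g = grade(slices[i].cputype, slices[i].cpusubtype);
        if (g > best_grade) {  /* strictly better: ties keep the earlier slice */
            best_grade = g;
            *best_index = i;
        }
    }
    return best_grade != 0 ? 0 : SK_EBADARCH;
}

/* Hypothetical grader accepting only CPU_TYPE_ARM64 / CPU_SUBTYPE_ARM64E,
   after masking off the sub type's capability byte. */
int grade_arm64e_only(uint32_t cputype, uint32_t cpusubtype) {
    return (cputype == 0x0100000cu && (cpusubtype & 0x00ffffffu) == 2u) ? 1 : 0;
}
```

Fed LuLu’s two slices (x86_64 and arm64), this returns 86 without ever setting the index, whereas Calculator’s x86_64/arm64e pair selects index 1, mirroring what getBestSlice printed.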

Recall we’ve invoked macho_best_slice on LuLu’s universal binary, which contains two slices. Each slice passes the isMachO check, and the first slice (the x86_64 Intel Mach-O) as expected fails the grade method …as, well, Intel binaries aren’t natively compatible with Apple Silicon systems. So far, so good. However, the 2nd slice is an arm64 Mach-O, which is definitely compatible …yet dyld3::GradedArchs::grade still returns 0 (false/fail). WTF!?

Looking at the source code for the dyld3::GradedArchs::grade method (found in dyld’s MachOFile.cpp file) ultimately gives us insight into what is going wrong.

```cpp
int GradedArchs::grade(uint32_t cputype, uint32_t cpusubtype, bool isOSBinary) const
{
    for (const CpuGrade* p = _orderedCpuTypes; p->type != 0; ++p) {
        if ( (p->type == cputype) && (p->subtype == (cpusubtype & ~CPU_SUBTYPE_MASK)) ) {
            if ( p->osBinary ) {
                if ( isOSBinary )
                    return p->grade;
            }
            else {
                return p->grade;
            }
        }
    }
    return 0;
}
```

From the code you can see that, given a CPU type and sub type, it checks these against the values in a (GradedArchs) class array named _orderedCpuTypes. If there is a match, a “grade” is returned; otherwise 0 (fail). Note that the sub type comparison is an exact match, made after masking off the capability bits with ~CPU_SUBTYPE_MASK.

From the source code we know this grade method was invoked via the launchArchs object:

```cpp
const GradedArchs* launchArchs = &GradedArchs::forCurrentOS(false, false);

...

// later, inside macho_best_slice_fd_internal (which receives *launchArchs)
int sliceGrade = launchArchs.grade(mf->cputype, mf->cpusubtype, isOSBinary);
```

Recall the call to GradedArchs::forCurrentOS returned an object initialized via GradedArchs({GRADE_arm64e, 1}) (where GRADE_arm64e is set to CPU_TYPE_ARM64, CPU_SUBTYPE_ARM64E, false).

Both universal and Mach-O binaries (either stand-alone or slices) contain a header that specifies the CPU type and sub type they are compatible with. We already saw this in the fat_arch structure that describes each embedded Mach-O slice. However, you’ll also find the (same) CPU information in the Mach-O header, whose type is mach_header (defined in mach-o/loader.h):

```c
struct mach_header {
    uint32_t magic;      /* mach magic number identifier */
    int32_t  cputype;    /* cpu specifier */
    int32_t  cpusubtype; /* machine specifier */
    uint32_t filetype;   /* type of file */
    uint32_t ncmds;      /* number of load commands */
    uint32_t sizeofcmds; /* the size of all the load commands */
    uint32_t flags;      /* flags */
};
```

As we noted, the dyld3::GradedArchs::grade function takes this CPU information for each embedded slice and checks to see if it’s compatible with the CPU of the current system. Logically, this makes total sense.

But of course the devil is in the details …and details are best observed in a debugger. Here we’ll focus on the _orderedCpuTypes array that is defined in dyld’s MachOFile.h file, within the GradedArchs class:

```cpp
// private:
    // should be private, but compiler won't statically initialize static members above
    struct CpuGrade { uint32_t type; uint32_t subtype; bool osBinary; uint16_t grade; };
    const CpuGrade     _orderedCpuTypes[3];  // zero terminated
};
```

Back to the debugger. First we set a breakpoint on dyld3::GradedArchs::grade. In the case of LuLu, which recall has two embedded slices, this is called twice.

```
% lldb getBestSlice LuLu.app/Contents/MacOS/LuLu
...
* thread #1, queue = 'com.apple.main-thread', stop reason = breakpoint 13.1
    frame #0: 0x0000000189df31ec libdyld.dylib`dyld3::GradedArchs::grade(unsigned int, unsigned int, bool) const
libdyld.dylib`dyld3::GradedArchs::grade:
(lldb) reg read $w1
      w1 = 0x01000007
(lldb) reg read $w2
      w2 = 0x00000003
```

As grade is a C++ method, the first (implicit) argument is the object pointer, here a GradedArchs. This means the CPU type and sub type can be found in the 2nd and 3rd arguments, which on arm64 will be in the x1 and x2 registers, respectively. However, since the grade method declares these arguments as uint32_ts, they’ll actually be in w1 and w2 (which represent the lower 32 bits of the 64-bit x registers).

In the debugger output you can see these values are 0x01000007 and 0x00000003.

Using otool to dump the Mach-O headers of LuLu’s slices, we see:

```
otool -h LuLu.app/Contents/MacOS/LuLu
LuLu (architecture x86_64):
Mach header
      magic  cputype cpusubtype  caps    filetype ncmds sizeofcmds      flags
 0xfeedfacf 16777223          3  0x00           2    30       4272 0x00200085
LuLu (architecture arm64):
Mach header
      magic  cputype cpusubtype  caps    filetype ncmds sizeofcmds      flags
 0xfeedfacf 16777228          0  0x00           2    30       4432 0x00200085
```

The first slice has a CPU type of 16777223, or 0x1000007 …which is exactly what we see in the debugger. This value maps to CPU_TYPE_X86_64, identifying LuLu’s first slice as a Mach-O compiled for 64-bit Intel CPUs. Sub type 3 maps to CPU_SUBTYPE_X86_64_ALL (which shares the value 3 with CPU_SUBTYPE_I386_ALL), meaning any 64-bit Intel CPU.

While we’re here, let’s look at the otool output for the second, arm64 slice. Its CPU type is set to 16777228 (or 0x100000C) and its sub type to 0. These map to CPU type CPU_TYPE_ARM64 and sub type CPU_SUBTYPE_ARM64_ALL. As its name implies, CPU_SUBTYPE_ARM64_ALL specifies compatibility with all arm64 CPU variants!
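The same fields otool printed can be pulled out of a slice with a few lines of C. This sketch assumes a 64-bit little-endian slice (true of both x86_64 and arm64 slices), redefines MH_MAGIC_64 (0xfeedfacf) locally, and splits the raw sub type the way otool’s cpusubtype and caps columns appear to (low 24 bits versus the high, CPU_SUBTYPE_MASK byte). header_fields and read_slice_header are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SK_MH_MAGIC_64 0xfeedfacfu  /* value from <mach-o/loader.h> */

/* The columns otool -h prints for each slice. */
struct header_fields {
    uint32_t magic, cputype, cpusubtype, caps, filetype, ncmds, sizeofcmds, flags;
};

static uint32_t read_le32(const uint8_t *p) {
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Decodes the start of a slice. Returns 0 on success, or -1 if the buffer
   is too short or does not begin with MH_MAGIC_64. */
int read_slice_header(const uint8_t *slice, size_t len, struct header_fields *h) {
    assert(slice != NULL && h != NULL);
    if (len < 28 || read_le32(slice) != SK_MH_MAGIC_64)
        return -1;
    uint32_t sub  = read_le32(slice + 8);
    h->magic      = read_le32(slice);
    h->cputype    = read_le32(slice + 4);
    h->cpusubtype = sub & 0x00ffffffu;  /* sub type without capability bits */
    h->caps       = sub >> 24;          /* the CPU_SUBTYPE_MASK byte */
    h->filetype   = read_le32(slice + 12);
    h->ncmds      = read_le32(slice + 16);
    h->sizeofcmds = read_le32(slice + 20);
    h->flags      = read_le32(slice + 24);
    return 0;
}
```

Given the start of LuLu’s arm64 slice, it should report cputype 0x0100000c (16777228), cpusubtype 0 and caps 0, matching the otool output above.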

Before we head back to the debugger, here is the annotated disassembly for the dyld3::GradedArchs::grade, which will assist in our debugging endeavors:

```
0x189df31ec: mov    x8, #0x0               ; current p index/offset
0x189df31f0: and    w9, w2, #0xffffff      ; cpusubtype & ~CPU_SUBTYPE_MASK
0x189df31f4: ldr    w10, [x0, x8]          ; p->type = _orderedCpuTypes[index]->type
0x189df31f8: cbz    w10, 0x189df322c       ; p->type == 0 ? -> go to done
0x189df31fc: cmp    w10, w1                ; p->type == cputype ?
0x189df3200: b.ne   0x189df3220            ; no match, go to next
0x189df3204: add    x10, x0, x8            ; p = _orderedCpuTypes[index]
0x189df3208: ldr    w11, [x10, #0x4]       ; extract p->subtype
0x189df320c: cmp    w11, w9                ; p->subtype == cpusubtype ?
0x189df3210: b.ne   0x189df3220            ; no match, go to next
0x189df3214: ldrb   w10, [x10, #0x8]       ; extract p->osBinary
0x189df3218: cbz    w10, 0x189df3234       ; !p->osBinary ? go to leave, returning p->grade
0x189df321c: tbnz   w3, #0x0, 0x189df3234  ; isOSBinary arg set ? go to leave, returning p->grade
0x189df3220: add    x8, x8, #0xc           ; p index++ (each CpuGrade is 0xc bytes)
0x189df3224: cmp    x8, #0x30              ; p index != 0x30 ?
0x189df3228: b.ne   0x189df31f4            ; next
0x189df322c: mov    w0, #0x0               ; return 0
0x189df3230: ret

0x189df3234: add    x8, x0, x8             ; p = _orderedCpuTypes[index]
0x189df3238: ldrh   w0, [x8, #0xa]         ; return p->grade
0x189df323c: ret
```

The main takeaway is that the _orderedCpuTypes array can be found at x0 (which makes sense, as x0 holds the GradedArchs object, and _orderedCpuTypes is its instance variable).

Grab Maria Markstedter’s excellent “Blue Fox: Arm Assembly Internals and Reverse Engineering” book.

From the definition of the _orderedCpuTypes array (in dyld’s MachOFile.h) we know it contains at most 3 CpuGrade structures. Also, as we have the definition of the CpuGrade structure, we know its size (0xC) and members (the first two being the CPU type and sub type, as 32-bit values).

Finally, from the source code and disassembly, we know that _orderedCpuTypes will be zero-terminated. We can see this in the source code as the loop termination condition, p->type != 0, and in the disassembly as ldr w10, [x0, x8] / cbz w10, 0x189df322c.

This is all pertinent as we can then dump _orderedCpuTypes array to see what CPUs types/sub types it contains:

```
(lldb) x/wx $x0
0x0100000c 0x00000002 0x00010000 0x00000000
0x00000000 0x00000000 0x00000000 0x00000000
0x00000000 0x00000000 0x00000000 0x00000000
```

The first (and only) entry contains CPU type 0x0100000c (CPU_TYPE_ARM64) and sub type 0x2 (CPU_SUBTYPE_ARM64E). This matches what was returned by GradedArchs::forCurrentOS (i.e. GRADE_arm64e).
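Since the dump is just raw words, the decoding can be spelled out explicitly. The C sketch below assumes the layout implied by the disassembly: 12-byte records with type at offset 0, subtype at 4, the osBinary byte at 8 and the 16-bit grade at 0xa, read on a little-endian host (so the third word’s low byte is osBinary and its upper half is grade). decode_grades is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Decoded form of one 12-byte CpuGrade record. */
struct decoded_grade { uint32_t type, subtype; uint8_t osBinary; uint16_t grade; };

/* Walks 32-bit words as printed by the debugger, three per record, stopping
   at the zero terminator. Returns the number of records decoded. */
size_t decode_grades(const uint32_t *words, size_t nwords,
                     struct decoded_grade *out, size_t max) {
    assert(words != NULL && (out != NULL || max == 0));
    size_t count = 0;
    for (size_t i = 0; i + 3 <= nwords && count < max; i += 3) {
        if (words[i] == 0)                  /* p->type == 0: end of table */
            break;
        out[count].type     = words[i];
        out[count].subtype  = words[i + 1];
        out[count].osBinary = (uint8_t)(words[i + 2] & 0xffu);   /* byte at +0x8 */
        out[count].grade    = (uint16_t)(words[i + 2] >> 16);    /* half at +0xa */
        count++;
    }
    return count;
}
```

Run over the twelve words printed above, it yields exactly one record: type 0x0100000c, sub type 2, osBinary 0, grade 1.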

Back to the debugger, we’ll ignore the first call to dyld3::GradedArchs::grade, as it makes sense that the first slice (the x86_64 slice) will receive a failing grade on an Apple Silicon system, as Intel code is not natively compatible.

When dyld3::GradedArchs::grade is called again, we can confirm it’s grading LuLu’s arm64 slice, which we know is compatible and thus should clearly receive a passing grade:

```
% lldb getBestSlice LuLu.app/Contents/MacOS/LuLu
...
* thread #1, queue = 'com.apple.main-thread', stop reason = breakpoint 13.1
    frame #0: 0x0000000189df31ec libdyld.dylib`dyld3::GradedArchs::grade(unsigned int, unsigned int, bool) const
libdyld.dylib`dyld3::GradedArchs::grade:
(lldb) reg read $w1
      w1 = 0x0100000c
(lldb) reg read $w2
      w2 = 0x00000000
```

Recall CPU_TYPE_ARM64 is 0x100000c and CPU_SUBTYPE_ARM64_ALL is 0x0, which are the values that the grade method is invoked with the 2nd time.

Knowing that LuLu’s arm64 slice has CPU_TYPE_ARM64 (0x100000c) and CPU_SUBTYPE_ARM64_ALL (0x0), and that the _orderedCpuTypes array only has an entry for CPU_TYPE_ARM64 (0x100000c) and CPU_SUBTYPE_ARM64E (0x2), you can see that although the dyld3::GradedArchs::grade function will find that the CPU type CPU_TYPE_ARM64 matches, there will be a sub type mismatch, as when compared directly, CPU_SUBTYPE_ARM64_ALL (0x0) != CPU_SUBTYPE_ARM64E (0x2).

We noted that, as its name implies, CPU_SUBTYPE_ARM64_ALL specifies compatibility with all arm64 CPU variants. This means that yes, even an arm CPU with a sub type of CPU_SUBTYPE_ARM64E (e.g. Apple Silicon) can execute code whose sub type is CPU_SUBTYPE_ARM64_ALL. Thus LuLu’s 2nd (arm64) slice should be graded as ok, even though its sub type is CPU_SUBTYPE_ARM64_ALL.
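To see the mismatch without a debugger, the grade loop can be ported to plain C. In this sketch, struct cpu_grade mirrors the CpuGrade fields, and arm64e_only is an assumed stand-in for GradedArchs::arm64e (a single {CPU_TYPE_ARM64, CPU_SUBTYPE_ARM64E} entry with grade 1, plus the zero terminator). Neither is dyld’s actual code; it is a re-implementation for experimentation.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define SK_CPU_SUBTYPE_MASK 0xff000000u  /* from <mach/machine.h> */

/* Mirrors the fields of dyld's GradedArchs::CpuGrade. */
struct cpu_grade { uint32_t type; uint32_t subtype; bool osBinary; uint16_t grade; };

/* Zero-terminated table, exact (type, masked sub type) match, else 0. */
int grade(const struct cpu_grade *table, uint32_t cputype,
          uint32_t cpusubtype, bool isOSBinary) {
    assert(table != NULL);
    for (const struct cpu_grade *p = table; p->type != 0; ++p) {
        if (p->type == cputype &&
            p->subtype == (cpusubtype & ~SK_CPU_SUBTYPE_MASK)) {
            if (!p->osBinary || isOSBinary)  /* osBinary entries only grade OS binaries */
                return p->grade;
        }
    }
    return 0;
}

/* Assumed stand-in for GradedArchs::arm64e: {GRADE_arm64e, 1}, then a terminator. */
const struct cpu_grade arm64e_only[] = {
    { 0x0100000cu, 2u, false, 1 },  /* CPU_TYPE_ARM64, CPU_SUBTYPE_ARM64E */
    { 0, 0, false, 0 },
};
```

With this table, an arm64e sub type of 0x80000002 grades 1, whereas an arm64 slice whose sub type is CPU_SUBTYPE_ARM64_ALL (0) grades 0, exactly the failure observed for LuLu.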

So (finally) we understand the issue, which, depending on your viewpoint, is either:

- The dyld3::GradedArchs::grade method does not take into account the nuances of the *_ALL CPU sub types. Namely, that any CPU with a more specific sub type (e.g. CPU_SUBTYPE_ARM64E) can still execute code compiled with a CPU sub type of *_ALL (e.g. CPU_SUBTYPE_ARM64_ALL).
- When the GradedArchs “launchArchs” object was initialized, it should have been initialized not with just GRADE_arm64e, but also with GRADE_arm64 (and likely GRADE_x86_64 too, as Intel Mach-Os can technically run on macOS thanks to Rosetta’s emulation).
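For the first interpretation, a fix could look something like the following hypothetical C sketch (not Apple’s code, and not necessarily how dyld would approach it): an exact match still wins, but a slice built for the family-wide *_ALL sub type is accepted with a slightly lower grade whenever the table lists some variant of the same CPU type. The per-family *_ALL values (0 for arm64, 3 for x86_64) come from <mach/machine.h>; grade_all_aware and all_subtype_for are illustrative names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Values from <mach/machine.h>, redefined so the sketch is self-contained. */
#define SK_CPU_TYPE_X86_64  0x01000007u
#define SK_CPU_TYPE_ARM64   0x0100000cu
#define SK_CPU_SUBTYPE_MASK 0xff000000u

struct cpu_grade { uint32_t type, subtype; int grade; };

/* The family-wide "*_ALL" sub type for the CPU types of interest here. */
static uint32_t all_subtype_for(uint32_t cputype) {
    if (cputype == SK_CPU_TYPE_ARM64)  return 0u;  /* CPU_SUBTYPE_ARM64_ALL */
    if (cputype == SK_CPU_TYPE_X86_64) return 3u;  /* CPU_SUBTYPE_X86_64_ALL */
    return UINT32_MAX;                             /* unknown family: no fallback */
}

/* Grades are doubled so that an *_ALL fallback (2g - 1) ranks just below an
   exact match on the same table entry (2g). Any exact match takes precedence. */
int grade_all_aware(const struct cpu_grade *table, uint32_t cputype,
                    uint32_t cpusubtype) {
    assert(table != NULL);
    uint32_t sub = cpusubtype & ~SK_CPU_SUBTYPE_MASK;
    int exact = 0, fallback = 0;
    for (const struct cpu_grade *p = table; p->type != 0; ++p) {
        if (p->type != cputype)
            continue;
        if (p->subtype == sub) {
            if (2 * p->grade > exact)
                exact = 2 * p->grade;        /* exact (type, sub type) match */
        } else if (sub == all_subtype_for(cputype) && 2 * p->grade - 1 > fallback) {
            fallback = 2 * p->grade - 1;     /* *_ALL slice on a specific variant */
        }
    }
    return exact != 0 ? exact : fallback;
}
```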

After posting this write-up, Marc-Etienne noted that the bug is likely the latter case, in which the wrong GradedArchs were used.

Moreover he pointed out that Apple likely should have invoked not GradedArchs::forCurrentOS but rather GradedArchs::launchCurrentOS. As we noted, the former returns just GRADE_arm64e, which means arm64 slices are deemed incompatible. We look at the latter, launchCurrentOS, below.

As Marc-Etienne pointed out, the issue is likely that the wrong GradedArchs were passed to macho_best_slice_fd_internal. Recall these incorrect grades were initialized via:

```cpp
const GradedArchs* launchArchs = &GradedArchs::forCurrentOS(false, false);
```

…which returns only GRADE_arm64e.

However, if Apple had instead invoked GradedArchs::launchCurrentOS, grades for arm64e, arm64, and x86_64 would have been returned, and our universal binary would have been processed correctly, with the arm64 Mach-O identified as both valid and the best slice.

Let’s look at the GradedArchs::launchCurrentOS method.

```cpp
const GradedArchs& GradedArchs::launchCurrentOS(const char* simArches)
{
#if TARGET_OS_SIMULATOR
    // on Apple Silicon, there is both an arm64 and an x86_64 (under rosetta) simulators
    // You cannot tell if you are running under rosetta, so CoreSimulator sets SIMULATOR_ARCHS
    if ( strcmp(simArches, "arm64 x86_64") == 0 )
        return launch_AS_Sim;
    else
        return x86_64;
#elif TARGET_OS_OSX
  #if __arm64__
    return launch_AS;
  #else
    return isHaswell() ? launch_Intel_h : launch_Intel;
  #endif
#else
    // all other platforms use same grading for executables as dylibs
    return forCurrentOS(true, false);
#endif
}
```

You can see that on macOS (TARGET_OS_OSX), where any Apple Silicon build will have __arm64__ set, the method returns launch_AS. launch_AS is a GradedArchs containing all three grades:

```cpp
const GradedArchs GradedArchs::launch_AS = GradedArchs({GRADE_arm64e, 3}, {GRADE_arm64, 2}, {GRADE_x86_64, 1});
```
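Plugging launch_AS into the same exact-match rule shows why it would have fixed things. In this C sketch, launch_as is an assumed rendering of that table (it further assumes GRADE_arm64 and GRADE_x86_64 expand to the *_ALL sub types, 0 and 3 respectively), grade_with drops the osBinary handling, and best_index is a hypothetical helper over (type, sub type) pairs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct cpu_grade { uint32_t type, subtype; uint16_t grade; };

/* Assumed C rendering of launch_AS, zero-terminated. */
const struct cpu_grade launch_as[] = {
    { 0x0100000cu, 2u, 3 },  /* CPU_TYPE_ARM64,  CPU_SUBTYPE_ARM64E */
    { 0x0100000cu, 0u, 2 },  /* CPU_TYPE_ARM64,  CPU_SUBTYPE_ARM64_ALL */
    { 0x01000007u, 3u, 1 },  /* CPU_TYPE_X86_64, CPU_SUBTYPE_X86_64_ALL (Rosetta) */
    { 0, 0, 0 },
};

/* Same exact-match rule as grade(), minus the osBinary handling. */
int grade_with(const struct cpu_grade *t, uint32_t type, uint32_t subtype) {
    for (; t->type != 0; ++t)
        if (t->type == type && t->subtype == (subtype & 0x00ffffffu))
            return t->grade;
    return 0;
}

/* Index of the highest-graded (type, sub type) pair, or -1 if none passes. */
int best_index(const struct cpu_grade *t, const uint32_t (*slices)[2], size_t n) {
    assert(t != NULL && (slices != NULL || n == 0));
    int best = -1, best_grade = 0;
    for (size_t i = 0; i < n; i++) {
        int g = grade_with(t, slices[i][0], slices[i][1]);
        if (g > best_grade) {
            best_grade = g;
            best = (int)i;
        }
    }
    return best;
}
```

With this table, LuLu’s arm64 slice (index 1) grades 2 and is selected, Calculator’s arm64e slice grades 3, and an Intel-only binary would still get a passing grade of 1 for its x86_64 slice (the Rosetta case).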

One more thing to point out: it should now make sense why macho_best_slice succeeds for Apple binaries! Such binaries have slices with a CPU sub type of CPU_SUBTYPE_ARM64E, and thus the check in dyld3::GradedArchs::grade, which compares against (just) GRADE_arm64e, succeeds.

We can confirm this by invoking macho_best_slice on an Apple binary. As expected, we can see in the debugger that the CPU type passed to the grade method is 0x100000C (CPU_TYPE_ARM64), and the sub type is 0x80000002, which (after masking out the capability bits stored in the sub type’s high byte via ~CPU_SUBTYPE_MASK) maps to CPU_SUBTYPE_ARM64E (0x2):

```
% lldb getBestSlice /System/Applications/Calculator.app/Contents/MacOS/Calculator
...
* thread #1, queue = 'com.apple.main-thread', stop reason = breakpoint 13.1
    frame #0: 0x0000000189df31ec libdyld.dylib`dyld3::GradedArchs::grade(unsigned int, unsigned int, bool) const
libdyld.dylib`dyld3::GradedArchs::grade:
(lldb) reg read $w1
      w1 = 0x100000c
(lldb) reg read $w2
      w2 = 0x80000002
```

…and thus macho_best_slice happily returns and our program is given the right slice:

```
% ./getBestSlice /System/Applications/Calculator.app/Contents/MacOS/Calculator
Best architecture:
 Name: arm64e
 Size: 262304 (0x400a0)
 Offset: 278528 (0x44000)
```

Conclusion

After observing that the macho_best_slice API was broken for any 3rd-party binary, we took a deep dive into its (and also dyld’s) internals to figure out exactly why.

What we found was that a “grading” algorithm used to identify compatible slices contained a fundamental flaw: either it does not take into account the nuances of *_ALL CPU sub types, or it was not invoked with the appropriate grades.

And while this might seem like a rather unimpactful bug, in the context of malware detection, this is not the case. As we noted at the start, security tools depend on macOS’s APIs to return the correct slice in order to scan binaries for malicious code. And if the system APIs are flawed, it’s possible that malicious binaries can avoid being scanned, and thus will pass unnoticed!

So instead of fighting the efforts of the EU to make the Apple ecosystem more open and user-friendly, maybe Apple should instead make sure its code works in the first place? 🫣
